Blog Archives

Red Gate Virtual Restore Scripting (Part 10)

If you have been following along since December, then you know that I have been investigating the Red Gate Backup and Restore bundle to implement in our system alongside Ola Hallengren’s backup maintenance scripts. One of our last stumbling blocks has been automating and scripting the Red Gate Virtual Restore product. We have moved methodically through the list and, with the help of Red Gate’s excellent support department, resolved each item, including this one.

The problem was that when we tried to verify the backup through restoration and DBCC CHECKDB on one database that is considerably larger than all of the others on this server, the restore script we created last week ran out of space on the drive. That scripting solution is great as long as there is enough space on the drives to attach the database files from backup and then drop them.

The beauty of the Virtual Restore product, in case you did not already know, is that it can restore these files with a much smaller footprint than the actual database files take up in their existing format. However, the problem is that it is strictly a wizard at this point: it grabs a specific backup, runs the restore manually, and then drops the database once it has checked integrity and consistency. This is a great product, but a scripting feature should be included, or else it does not help much, especially when you have hundreds of databases.

We ran the wizard and then viewed the script:

RESTORE DATABASE [TestDB_Virtual] FROM

DISK=N'I:\TestDB\FULL\SQLPROD40_TestDB_FULL_20130101_213217.sqb'

WITH MOVE N'TestDB_Data' TO N'F:\VirtualRestore\TestDB_Data_TestDB_Virtual.vmdf',

MOVE N'TestDB_Index' TO N'F:\VirtualRestore\TestDB_idx_TestDB_Virtual.vmdf',

MOVE N'TestDB_Log' TO N'F:\VirtualRestore\TestDB_Log_TestDB_Virtual.vldf', NORECOVERY, STATS=1, REPLACE

GO

RESTORE DATABASE [TestDB_Virtual] WITH RECOVERY, RESTRICTED_USER

GO

DBCC CHECKDB ([TestDB_Virtual])

GO

DROP DATABASE [TestDB_Virtual]

GO

This script did not work when we ran it via T-SQL because of a lack of disk space, which is the same problem we encountered using the Backup Pro restore script; however, it did work with the Red Gate Virtual Restore Wizard. We contacted support to find out why there was a difference on the same box. Basically, SQL Server does not know that the HyperBac service is running in the background. The wizard is smart enough to check your disk space, and if you do not have enough, it temporarily adds an entry to the HyperBac configuration that tricks SQL Server into thinking that you have enough disk space to complete the task.

The parameter is “VirtualDiskSize=” where the value is an integer representing the disk size in megabytes. You add the parameter to the bottom of the hyper.conf file found in the C:\Program Files (x86)\Red Gate\HyperBac\Bin folder. Then you restart the HyperBac service and the problem is solved! Next I will try to automate this script… stay tuned!
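As a rough sketch of how the manual edit could be scripted, the Python below adds (or replaces) a VirtualDiskSize line at the bottom of the config text. The function names are made up for illustration, and I am assuming hyper.conf is a plain text file with one setting per line; check your own file before trusting that. The HyperBac service still has to be restarted afterwards.

```python
from pathlib import Path

# Location taken from the post; adjust it for your own install.
HYPER_CONF = Path(r"C:\Program Files (x86)\Red Gate\HyperBac\Bin\hyper.conf")


def set_virtual_disk_size(conf_text: str, size_mb: int) -> str:
    """Return conf_text with a single VirtualDiskSize=<size_mb> line at the bottom.

    Assumption: the file is plain text with one setting per line.
    """
    if size_mb <= 0:
        raise ValueError("size_mb must be a positive number of megabytes")
    # Drop any earlier VirtualDiskSize entry so the setting is not duplicated.
    kept = [
        line
        for line in conf_text.splitlines()
        if not line.strip().lower().startswith("virtualdisksize=")
    ]
    kept.append(f"VirtualDiskSize={size_mb}")
    return "\n".join(kept) + "\n"


def update_hyper_conf(path: Path, size_mb: int) -> None:
    """Rewrite the config file in place (hypothetical helper, not a Red Gate tool)."""
    path.write_text(set_virtual_disk_size(path.read_text(), size_mb))
```

Calling update_hyper_conf(HYPER_CONF, 500000) would set the value to 500,000 MB; take a copy of the file first, since this overwrites it.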

Red Gate Backup Pro Continued (Part 5)

Continuing in my series on the Red Gate Backup Pro 7.2 utility for SQL Server, I tried yesterday, rather unsuccessfully, to set up a development server to do all of my virtual restores in order to verify all of the backups for my production servers. It works great on this particular development server for the backups taken on that same server. However, doing this across servers is complicated by a complex array of directions and advanced security options that really obfuscate a simple idea. Can it be done? Red Gate says yes, with directions provided on their website, among them this link and this link, in addition to their security model.

Maybe I have missed something, I am not sure. But it looks to me that the security is far more advanced than it needs to be for the idea of a ‘virtual restore’ and I do not want a simple AD account to have so many privileges. Maybe I will step back and approach it again after the holidays.

However, if that falls through I will just set this up on each production server. I am also trying to script all of these virtual restores into a single script that grabs all of the databases instead of hard coding each individual database in the script. This will automate my maintenance such that when new databases are created, I will not have to worry about setting them up in the restore script. I have some ideas but have not been able to get them to work yet. Press on and enjoy!
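One way this could be approached, sketched in Python: generate the same restore, DBCC CHECKDB, and drop batch that the wizard produced, once per database, from a list instead of hard coding each one. The function names are hypothetical, and the database name, backup file, and logical/physical file pairs are assumed to be supplied by the caller (in practice they would have to be looked up on the server). The script template simply follows the wizard output from Part 10.

```python
def quote_name(name: str) -> str:
    """Bracket-quote a SQL Server identifier."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Quote a value as an N'...' string literal."""
    return "N'" + value.replace("'", "''") + "'"


def build_verify_script(db_name, backup_file, moves):
    """Build the restore / CHECKDB / drop batch for one database.

    moves is a list of (logical_file_name, physical_path) pairs.
    The restored copy is named <db_name>_Virtual, as in the wizard output.
    """
    target = quote_name(db_name + "_Virtual")
    move_clauses = ",\n".join(
        f"MOVE {quote_literal(logical)} TO {quote_literal(physical)}"
        for logical, physical in moves
    )
    return "\n".join([
        f"RESTORE DATABASE {target} FROM",
        f"DISK={quote_literal(backup_file)}",
        f"WITH {move_clauses}, NORECOVERY, STATS=1, REPLACE",
        "GO",
        f"RESTORE DATABASE {target} WITH RECOVERY, RESTRICTED_USER",
        "GO",
        f"DBCC CHECKDB ({target})",
        "GO",
        f"DROP DATABASE {target}",
        "GO",
    ])
```

Looping over a list of databases and joining the results would give one script for the whole server, so a new database only needs to show up in the list.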

Some Additional Findings on Red Gate Backups (Part 4)

I just wanted to point out a couple of things I noticed after a weekend of Red Gate SQL Backup Pro compressed backups and virtual restores on our development servers:

- The <database_name>_Restored database generates a few SQL Monitor alerts for all of the newly restored temporary databases that are eventually dropped after the DBCC CHECKDB is run against them. On our system we got up to five notifications per database:

  - Database file size increased (Custom metric)

  - Rate of write transaction/sec increased (Custom metric)

  - Custom metric collection error

  - Page verification

  - Database unavailable

- When using a plain-Jane AD account to install and run the backups, you need to go into each individual database and add that user to the ‘db_backupoperator’ database role. This is not necessarily Red Gate’s fault, as SQL Server does not have this at the instance level (I still do not understand why, but maybe I am just an idiot); however, in my opinion the Red Gate tool should have warned me about this at some point instead of giving a cryptic error message on failure. The Red Gate security model states that the account needs to be part of the sysadmin fixed server role, which goes against best practices (if you go further down it does give a workaround like I suggested, but I didn’t make it that far the first time I read the page, nor the second).

- There were a few servers that I had difficulty installing the agent onto unless I used an admin account, even though the regular account had the ‘log on as a service’ right and the sysadmin role as well as permissions on all of the folders. I could then switch the service back to that account after installation and the service started fine. The account might have been missing the ‘log on locally’ right. I will try that on the next server to test it.
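Since the db_backupoperator membership has to be added database by database, here is a small Python sketch that writes out the T-SQL for a list of databases. The function names are hypothetical, and it assumes a database user with the same name as the AD login already exists in each database; it uses the sp_addrolemember system procedure.

```python
def quote_name(name: str) -> str:
    """Bracket-quote a SQL Server identifier."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Quote a value as an N'...' string literal."""
    return "N'" + value.replace("'", "''") + "'"


def grant_backup_operator(databases, login):
    """Return T-SQL statements that add login to db_backupoperator in each database.

    Assumption: a user for the login already exists in every listed database.
    """
    statements = []
    for db in databases:
        statements.append(f"USE {quote_name(db)};")
        statements.append(
            f"EXEC sp_addrolemember N'db_backupoperator', {quote_literal(login)};"
        )
    return statements
```

Printing the returned statements one per line gives a script you can review before running it against the instance.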

I will keep this thread running as it appears there are some other issues. Enjoy!

Deadlock Graphs

Last night I learned about graphical deadlock graphs from Fargham Butt of Microsoft. Until now I had simply used the trace flag and then read the error logs to find the information. So today I thought I would write a blog post on the subject, and while doing some research first I found an excellent blog post by Jonathan Kehayias (B|T) covering Handling Deadlocks in SQL Server. Jonathan is a Microsoft Certified Master and does a much better job than I can do explaining the whole subject, so I thought it more prudent to just refer you to his blog. Give it a whirl and enjoy!

Troubleshooting Skills

One of the hardest concepts that new IT workers struggle with is the ability to troubleshoot. In my seventeen years in the field, I have never seen two employees tackle a problem in exactly the same manner. Troubleshooting is an art form derived out of experience and education.

You can learn how to troubleshoot various problems in a certain manner, but you have to be ready to switch gears and go another direction based upon the results of each step in your logic. Mentoring can help shape the way in which you tackle problems, but it is essentially your style which will determine how you logically break down problems and solve them step by step. The ability to troubleshoot will help determine the level of success in the IT field. What do you think?

SQL Nexus, Perf Stats Report

Continuing our SQL Nexus journey, I noticed another item with the Perf Stats reports. As I mentioned in a previous post, I imported SQL Server 2008 R2 data into SQL Nexus. However, when I choose the “SQL Server 2008 Perf Stats” report, I get the “The database doesn’t have necessary data to run this report” dialog box, yet when I choose the 2005 report, it opens.

Here is where it gets interesting: if I right-click on the report, I can select the report parameters. The only parameter is version and the value is 2005. That’s understandable, so I manually tried to change it to 2008. Voila! The report refreshes and the report title now reflects the new value. This report gives you three choices: Blocking and Wait Statistics, and Bottleneck Analysis, which both have their own reports on the menu, plus Spin Lock Stats. It appears that changing the parameter only changes the title of the report. I think next week I will crack open these two reports to see why the 2008 data loads in the 2005 report and not in the proper one, and what the parameter really does. Until then, enjoy your weekend!

SQL Nexus, Read Trace Errors Resolved!

Last Monday we started our SQL Nexus journey together in order for me to refresh my knowledge of this awesome tool. Near the end of last week, the blog series was delayed due to an error with the ReadTrace and its corresponding report. Yesterday and again today, I am troubleshooting our attempt to resume our journey but with little hope for correcting the error. I have posted the error on CodePlex and am awaiting a response.

Yesterday, I referenced a “ReadTrace exit code: -2” error in the ReadTrace log file. I did find one page out there on SQL Server Central where Grant Fritchey (Twitter | Blog) had encountered the problem. After I contacted Grant, he told me that his problem had been a simple one: he did not have the file and path name correct, which corresponded with the file-not-found error higher up in the ReadTrace log. However, when I cut and paste the file name straight out of my log, the file opens. Strange indeed, but Grant got me thinking, and so I read through all of the files looking for a possible mistake in the scripts. I could not find any.

Keep in mind, this is with running the StartSQLDiagDetailed_Trace2008R2.cmd performance script that I outlined in one of the previous posts. This simply references XML files that appear only to outline the performance indicators that we want to monitor. It is the MSDiagProcs.sql script that identifies the trace files to load and run. In addition, none of the three scripts will load trace files into the import.

Here is a sample of what is called from the CMD scripts:

H:\PerfScripts\2008R2>"C:\Program Files\Microsoft SQL Server\100\Tools\Binn\SQLdiag.exe" /I "H:\PerfScripts\2008R2\SQLDiagPerfStats_Trace2008.XML" /O "H:\PerfScripts\2008R2\SQLDiagOutput" /P

However, attempts to run this manually through ReadTrace also failed with one of the trace files created by the performance script, such as:

H:\Program Files\Microsoft Corporation\RMLUtils>readtrace.exe -I”H:\PerfScripts\2008R2\SQLDiagOutput\SQLVMDEV10_SQLDIAG__sp_trace.trc” -o”C:\Users\crssqladmin\AppData\Local\Temp\2\RML” -SSQLVMDEV10 -dsqlnexus -E -T18 -f -H!PSSDIAG

However, playing with the file names yielded a different problem. The double underscore, ‘__’, in the file names had seemed odd to me from the beginning, so I renamed the files to take it out, resulting in SQLVMDEV10_SQLDIAGsp_trace.trc and SQLVMDEV10_SQLDIAGsp_trace_blk.trc. The ReadTrace load then indicated a permissions error instead. When I granted my administrative account permissions on these two trace files, ReadTrace worked. Hmm, weird but interesting. I wonder now if the SQL Nexus import would load? See for yourself:

Enjoy and I hope this helps if you encountered the same issue!

SQL Nexus, Read Trace Errors

Last Monday we started our SQL Nexus journey together in order for me to refresh my knowledge of this awesome tool. Near the end of last week, the blog series was delayed due to an error with the ReadTrace and its corresponding report. Today, I am still attempting to resume our journey but with little hope for correcting the error. I have posted the error on CodePlex and am awaiting a response.

The first error I received when loading the trace files was error 26, which many people have put down to a permissions problem on the trace file. I resolved that issue by granting full control to my admin login and, when that alone did not resolve it, to SQL Server as well. Now I am receiving a “ReadTrace exit code: -2” error in the ReadTrace log file.

At this point, I can find no helpful information on the interwebs. I do find it interesting that the log file also says it cannot find the trace file it is trying to load; however, when I cut and paste the file name in question right from the log file, it opens in Notepad, so I am not sure that the error is entirely accurate. I checked the permissions on the trace file and they are consistent with the other files. I’m stumped.

Has anyone else seen this?

SQL Nexus, Data Collection

Monday we started our SQL Nexus journey together in order for me to refresh my knowledge of how to use this awesome tool. On Tuesday, we installed some essential pieces in order to get the SQL Nexus tool installed and ready to use. We detoured on Wednesday to attend the SQL Server 2012 Technology Days at Microsoft in Tampa. Today we return to the fun, so let’s jump right back in!

I realized this morning that I left out another download, the SQL Server 2005/2008 Performance Statistics collection scripts. This has the XML configurations needed to run SQLDiag in order to load performance data into SQL Nexus. Well, let me qualify that: these XML files are pre-configured, and they can be modified if necessary. These configurations will capture DMVs and also blocking information that can help you troubleshoot performance problems. They will also collect machine information from Perfmon, msinfo32, various event logs, and the profiler trace (unless you run the NoTrace command script). There is more detailed information on customization available on the CodePlex download page (make sure you read the fine print about the download location, as it is not the big download button on the right).

Once you run one of the scripts, such as StartSQLDiagDetailed_Trace2008R2.cmd, you will see several lines of preparatory messages followed by “SQLDIAG Collection started. Press Ctrl+C to stop.” At this point you can reproduce your issue, if possible. If that is not possible, then collect data for a few minutes and press Ctrl+C to finish the collection and generate the output necessary for SQL Nexus. Be vigilant when running this, as the output can grow very large very quickly, especially if you are running it against a production server. Be careful! Once the dialog closes, the output is ready to load into SQL Nexus.
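If you want something to keep an eye on the output folder while the collection runs, a sketch like the following could be run from a second window. This is not part of SQLDiag or the Perf Stats scripts; the function names and the idea of a size limit in megabytes are my own assumptions for illustration.

```python
import os


def folder_size_bytes(folder):
    """Total size in bytes of all files underneath folder."""
    total = 0
    for root, _dirs, files in os.walk(folder):
        for name in files:
            try:
                total += os.path.getsize(os.path.join(root, name))
            except OSError:
                # A file may be locked or removed while the collection is running.
                pass
    return total


def over_limit(folder, limit_mb):
    """True when the folder has grown past limit_mb megabytes."""
    return folder_size_bytes(folder) > limit_mb * 1024 * 1024
```

For example, over_limit(r"H:\PerfScripts\2008R2\SQLDiagOutput", 2048) would tell you when the output has passed 2 GB, which is your cue to press Ctrl+C.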

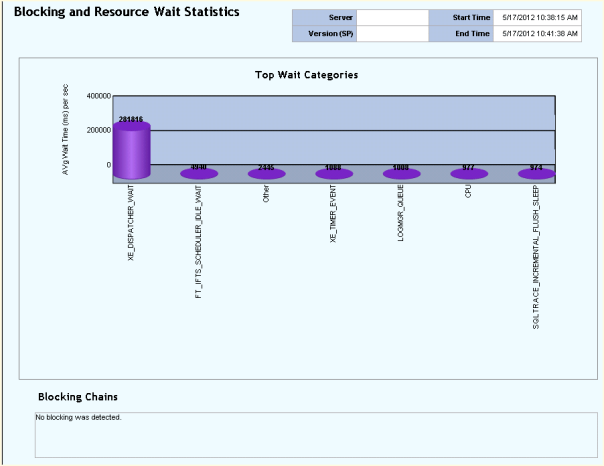

Now open SQL Nexus and import the SQLDiag data from the output folder, which by default is generated underneath the folder where you ran the script. From there we can run some of the reports located in the upper left corner of the interface. Not all of them will apply, depending upon what data you collected and which script ran. Below is a sample of the Blocking and Wait Statistics report. This was just a sample run for this blog, so unfortunately I did not have any blocking issues to show you. I hope this helps your understanding of SQL Nexus. Enjoy!

Sometimes It’s Best Not To Monkey Around

Recently I found a poorly performing server and I could not figure out why with all of the usual troubleshooting techniques. Something told me to look at some of the configuration settings and there it was shining like a crazy diamond. The sp_configure option ‘max worker threads’ was set to a value of 16. Seriously?

Now my curiosity was piqued. There are experts who change settings like this because they know what they are doing. Generally, I do not monkey around with these types of settings unless I have a really good reason to do so, and no, a vendor telling me to do it is not a good reason unless this SQL Server is dedicated solely to them, which it is not! Best practice says to leave this at zero so that SQL Server can manage the number of worker threads for optimal performance. Who am I to think that I know better than some really smart guys from Redmond? After doing some light reading on the subject, I learned that too few threads can cause “thread starvation”, where there are not enough threads to service the incoming client requests, resulting in poor performance.

In addition, setting this value too high can waste memory and also be detrimental to performance. In conclusion, do not monkey around with the settings unless you know what you are doing. That is all!
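To put that value of 16 in perspective, here is a small Python sketch of what SQL Server picks on its own when the setting is left at zero. It follows the formula as I understand it from Microsoft's documentation for servers of this era (up to 64 logical CPUs); newer versions use a larger multiplier on bigger machines, so treat this as an approximation. The function name is made up.

```python
def default_max_worker_threads(logical_cpus: int, is_64bit: bool = True) -> int:
    """Approximate default when 'max worker threads' is left at 0.

    Assumption: the documented formula for machines with up to 64 logical CPUs.
    """
    if logical_cpus < 1:
        raise ValueError("logical_cpus must be at least 1")
    base = 512 if is_64bit else 256      # default for 4 or fewer CPUs
    per_extra = 16 if is_64bit else 8    # added per CPU beyond the fourth
    return base + max(0, logical_cpus - 4) * per_extra
```

On a 64-bit server with eight logical CPUs that works out to 576 worker threads, so a hard-coded 16 leaves very little room for incoming requests.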

Enjoy!