Blog Archives

Pre-Con: Performance Tuning Training in Spartanburg, SC for #SQLSat431

On Friday, September 25th, 2015, Mike Lawell (b|t) and I will be giving our “Getting the New DBA Up to Speed with Performance Tuning” pre-con training for the inaugural SQL Saturday Spartanburg. We are extremely excited to be presenting this training again this year after the tremendous feedback we received earlier in Nashville. We have a passion for the SQL community and helping DBAs and developers to do their job better. We want to help you too! If you’ve never taken a pre-con before a SQL Saturday, it is a great way to get some high quality training at a low price and in your local area. Register here today!

In this session, we will take an in-depth look at performance tuning for the beginning DBA as well as the “Accidental DBA” in order to help prepare you for beginning to intermediate skills in tuning your real world queries. We will show you how to get started when you get the production support call stating that the database is slow. We will cover the basics of reading query execution plans as well as using dynamic management views in order to diagnose poor performance. We will also cover performance analysis tools and performance troubleshooting as well as some great demos to get you up and running tuning queries.

Prerequisites: Basic understanding of T-SQL and the SQL Server relational engine

Sections:

- An overview of server configuration best practices will be discussed along with key tools that can be used to identify performance problems.

- Several DMVs will be covered that can be used for performance data collection and diagnosis of performance issues. Third party free tools that use these DMVs will be demonstrated for the data collection.

- Common performance issues will be discussed along with the methods that can be used to identify the issues and resolve.

- The final section will look at the graphical Execution Plan basics and how to identify potential performance issues.

We are planning on a day filled with fun and adventure! Let us help you become a better DBA! Not a DBA? No problem! This is also an excellent training for developers who are writing queries in T-SQL!

Register here!

If you cannot make it to the pre-con, then make sure you check out our regular sessions on Saturday. Register here! Enjoy!

Grody to the VARCHAR(MAX)

This morning I was looking through a new vendor database to see exactly what I would be supporting and I stumbled upon a curious table. This table seemed quite normal to the naked eye until I glanced upon the data types. There were four, count them four fields set to VARCHAR(MAX) and two set to NVARCHAR(MAX). First of all, why the inconsistency in the data types? Maybe there is a legitimate reason, but I am not buying it. If you need Unicode support provided by the NVARCHAR data type wouldn’t you need it across all fields? I have only worked with a few databases that needed Unicode support and that was the case for them. Maybe there is a case for mixed usage, but I do not understand it and obviously I was not part of their design team.

Now onto the bigger elephant in the room, why on earth would you have all of these fields set to the maximum storage amount of two gigabytes (1GB for NVARCHAR taking up 2GB of space). Are you really storing that much data in six fields of EACH record? Keep in mind that SQL Server stores records in 8K pages. When you exceed that the data goes to an overflow page with a pointer so that SQL knows where to find the rest of the data. I understand that it will not take up that much space if you are not actually storing that much data, but there is a potential here for these table to be problematic. Granted this might be a good design for one field if it stores a large amount of text or notes, but six fields in the same record? I looked at the length of the records currently entered for these six fields and I found nothing larger than 100 characters. Overkill? What do you think?

Enjoy!

Shrink That Database…Seriously?

Yesterday afternoon my storage administrator came to me and asked me to look at his Enterprise Vault database and make sure that they were receiving the proper maintenance because he was running a routine over the weekend to remove some e-mails that had exceeded their retention period and it was painfully slow. He was only about to delete about a half-million e-mails over the course of the entire weekend. It is even more interesting because when I checked the databases yesterday all of them combined did not exceed 100 GB.

Yesterday afternoon my storage administrator came to me and asked me to look at his Enterprise Vault database and make sure that they were receiving the proper maintenance because he was running a routine over the weekend to remove some e-mails that had exceeded their retention period and it was painfully slow. He was only about to delete about a half-million e-mails over the course of the entire weekend. It is even more interesting because when I checked the databases yesterday all of them combined did not exceed 100 GB.

The administrator proceeded to call Symantec and spoke with an engineer who directed him to the following page. He then forwarded the link and asked me to make sure I was following their best practices listed on their site. No problem, I would be glad to compare their recommendations to our maintenance regimen.

I have included a screen shot just in case the page disappears…

Needless to say, I did not modify my maintenance regimen to include the deprecated Shrink Databases recommendation. If you are fine with this item, then you really need to read Paul Randall’s blog entry on why you should NEVER shrink your database. He explains much better than I do and he is the expert. Maybe someone out there knows someone at Symantec and we can get them to read the blog, attend a SQL Saturday, PASS Summit, or even Paul’s Immersion Event training. Someone please get them some help! Enjoy!

Corruption and Verifying Backups

This morning I had a conversation with a Subject Matter Expert and Application Administrator who asked me quite simply for a verified backup before he does an install on Saturday. My response was that I could test it manually for them and I was willing to do so but currently with our infrastructure the nightly backups are not tested without manual intervention. I have tried to change this policy for some time and unfortunately it may not occur until something bad happens. With this in mind, I do the best I can to minimize the risk given that I cannot automate a solution to restore databases on a regular basis as I would prefer. How can you minimize the risk?

- Use the WITH CHECKSUM option on your backup scripts. Read Paul Randall’s The Importance of Validating Backups article on SQL Server Central for more information.

- Use the RESTORE VERIFYONLY command (although we use Ola Hallengren’s Backup Solution which is the verify option which runs the command).

- Use the WITH CHECKSUM on the RESTORE command to recheck the page checksums in the backup as well as the backup file.

- Automate or manually restore your databases from scratch on a regular basis. (This is where we are lacking and where I want to take my servers).

- Once you have restored, then run DBCC CHECKDB against the restored database.

If you are not doing all five then you cannot say confidently that your backups are verified. However, if you are doing all five keep in mind that there is no fool proof guarantee against corruption, this merely minimizes the destruction by having viable backups. I hope this helps….Enjoy!

I Survived!

Well my first SQL Saturday presentation has been completed. No one was injured, I didn’t respond to my flight (as in fight or flight) instincts, and no one heckled me. It didn’t go as well as I imagined but it was a learning experience. I will get better, so if you attended my session today, I apologize, but thank you for being my guinea pigs.

Well my first SQL Saturday presentation has been completed. No one was injured, I didn’t respond to my flight (as in fight or flight) instincts, and no one heckled me. It didn’t go as well as I imagined but it was a learning experience. I will get better, so if you attended my session today, I apologize, but thank you for being my guinea pigs.

To be honest, I think I did better than my lightning round presentation last June. I understand that it is an evolutionary process, but I was confident that I was more evolved than I really am.

If you’ve never presented a topic, I highly recommend it. Enjoy!

Keeping Track of Details

After creating a few new SQL accounts this morning, I thought I would share how I keep track of minor details like this. We use SharePoint for our department to track documents, procedures, processes, etc. This is a great place to track minute SQL Server details like accounts and databases. For example, we have over 280 databases across 32 servers. Some of the applications may have five or ten databases and I cannot keep 280 databases in my head as far as where they are, what application they are used for, who the business owner is, and if they are production, acceptance, or development.

I setup a SharePoint spreadsheet (ok its called a datasheet, but it is just a spreadsheet), for tracking SQL user name and passwords and of course I limit the access on this sheet, in case you were thinking about that. This is handy so that we do not have to reset them when the application administrator forgets it and wants to reset it and throw the application into turmoil. I also setup a datasheet to track the name, server and the business owner so I know who to contact when a server goes down, who to contact when I need to do maintenance, in addition to knowing where the database is when a user reports an issue. This is a great organizational tool, I hope it helps you.

Do you do this differently? Enjoy!

AD Accounts Are Great, But…

Using Windows Authentication for your database is a great best practice to follow. However, one caveat to remember as one of my administrators found out today. Do not use that account as a login if it is also the account you use for your database.

We setup an AD account for his database, that part is great. He thought that was also the account to use as his application administrative account. Not a good idea because when he fat fingered the password this morning a few times, as people tend to do on Monday mornings when their coffee hasn’t fully kicked in, he locked out his application as well as the database sending 150 alert messages all over the department. This set off a chain reaction where he rebooted his server after unlocked the account and proceeded to call me and tell me that SQL was down and sounded the sky is falling alarm. He overreacted essentially.

When I figured out what he did, I explained the situation and told him that he needed another AD account for his application or I could setup another one for his database. He said he installed his application under that account and cannot change either one. Not the best scenario, but every time you fat finger the account you will bring the world down upon you. Your choice. He chose the road well traveled unfortunately. Enjoy!

Presentation Ponderings

After my first presentation at SQL Saturday Pensacola, I am ready to do another and need to submit for SQL Saturday Orlando later this month. All of that is not a problem, I just don’t know if I should do what I did in Pensacola and expand on it because the audience seemed to enjoy it or forge a new path. I have good arguments for both, maybe I will submit both and see which one gets picked, if they get picked, of course. I have three weeks to decide, but I should not wait until the last minute.

The presentation that I did in Pensacola was the Seven Deadly Sins of the SQL Server DBA. The other one I am thinking about doing is just a straight up best practices install guide. I am very passionate about best practices and there are so many people who I’ve spoken with said that they wish they had known some of the best practices at the time of their install instead of correcting them after the fact. It also may help that I was asked to give the Seven Deadly Sins at the next Tampa Bay user group meeting this month, so we will see how that goes, maybe it was a one-hit wonder and the decision will be made for me? What do you think?

Final Thought from SQL Saturday Pensacola Pre-Con

For my final blog about SQL Saturday #132, I wanted to leave you with one all-encompassing piece of advice that I received from the pre-conference training on Friday prior to the event. The pre-con titled “Demystifying Database Administration Best Practices” was presented by Microsoft Certified Masters Robert Davis (twitter|blog) and Argenis Fernandez (twitter|blog).

Of course, I will preface this advice with the fact that I am passionate about Best Practices and I also love using the Best Practice Analyzer from Microsoft to detect new violations of best practices. It is great to run on an instance when I am in a hurry and do not have time to go through a check list. I simply want to see if any new changes have been made since the last time I ran the tool also known as has anyone changed anything on the server without my knowledge. With that being said, I give you the advice.

“Do not always rely or trust the information from Best Practice Analyzer”

I do not remember if Robert or Argenis said this pearl and it really doesn’t matter because it is great advice for you and me. Do not mistake all of my previous posts on the BPA as gospel. It is a great tool, but it is an automated solution. It simply cannot know all of the specifics of your server, applications and environments. Hopefully you do!

This is the reason that you can choose to disregard some items so that you never see them again. I personally do not like doing that because if something changes in my department then I forgot all about the warning because I disregarded. If I see it is a warning then I know it is still there but there is nothing I can do about it.

Mixed mode authentication is a great example. We have to run it on some servers because of vendor applications but we are not happy about it. This will trigger a warning every time, but I do not disregard it because at some point that may change.

Have a great weekend and enjoy!

Green? If You Only Knew the Power of the Dark Side!

Continuing my blog series from SQL Saturday #132, I wanted to review one piece of good advice I received from the pre-conference training on Friday prior to the event. The pre-con titled “Demystifying Database Administration Best Practices” was presented by Microsoft Certified Masters Robert Davis (twitter|blog) and Argenis Fernandez (twitter|blog).

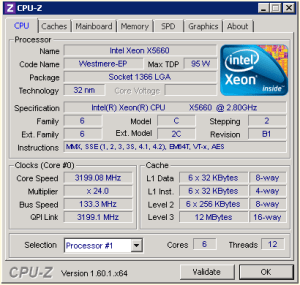

The default power setting for a new server is balanced as shown in the image above. This is great when you are trying to achieve a green workplace. But did you realize that you purchased hardware and now you are not using it to its full power? Why would you purchase a powerful server and then not use the server to its full capacity? Why not just buy a less powerful server if that is the goal?

Want to see the difference in the balance power plan and the high performance? Download the freeware CPU-Z utility from CPUID. This is a great utility for the administrator for more reasons than just this one as it gives realtime analysis for your CPU, memory, and graphics.

Check your servers, you may be running short on some power. Stay tuned for more items in our next installment. Enjoy!